This article is part of the ‘New Beings’ series of articles from Zenitech and Stevens & Bolton, examining the practicalities, issues and possibilities of using AI in development. In this article, we look specifically at Test Automation.

Background

I’m a QA Engineer who uses automation tools to support testing activities.

Some tooling requires programming skills. I have some, but I’m not a specialist in that area. I collaborate with developers – I really like neat solutions, so when I feel that there is a better way to implement a solution, we work together to find it. I explain my challenge, ideas and doubts, and then together we come up with alternatives, discuss pros and cons, and I take it from there, with developer support if needed.

I was wondering if an AI assistant could support us in this process, so I started using ChatGPT for some daily test automation activities. This article explains what I found. Later in the article, I move away from the ChatGPT chat interface and explore some plugins, which could become part of a more deeply integrated developer workflow.

Attempt to use ChatGPT

In this section, I describe how I used ChatGPT for one of the test automation tasks. I needed to expand an existing test automation solution to have additional capability – accessibility testing. We decided to use the axe-core library to scan web applications for accessibility defects. Axe is an accessibility testing engine for websites and other HTML-based user interfaces.

The existing automation solution is written in TypeScript. It uses CodeceptJS as a framework backbone to interact with systems under test: web, Android app, iOS app and backend APIs.

When asking for steps to solve the task, I included some context, the technologies involved and important keywords: axe-core, accessibility, automation, existing, CodeceptJS.

Q: Please provide me steps to start using axe-core for accessibility test automation in an existing CodeceptJS project

The chatbot provided seven-step instructions.

Steps 1-3 related to setting up CodeceptJS from scratch, even though I had tried to avoid this kind of information by stating that the project already existed.

The bot suggested creating a new CodeceptJS helper class AxeHelper.

This makes sense, because that’s how CodeceptJS is extended, according to the documentation. The helper code structure was OK, and the bot even provided the configuration needed to integrate the helper into the solution.
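The article does not reproduce the configuration the chatbot suggested, but in CodeceptJS a custom helper is registered in the `helpers` section of the config, next to the driver helper, with a `require` path pointing at the helper file. The sketch below shows roughly what that looks like for a WebDriver-based project. The helper name `AxeHelper`, its path, the URL and the local `CodeceptConfig` type (a stand-in for CodeceptJS's own config typings, so the snippet runs on its own) are all assumptions.

```typescript
// Local stand-in for the shape of a CodeceptJS config object.
// A real codecept.conf.ts would use CodeceptJS's own typings instead.
interface CodeceptConfig {
  tests: string;
  output: string;
  helpers: Record<string, Record<string, unknown>>;
  name: string;
}

export const config: CodeceptConfig = {
  tests: './tests/**/*_test.ts',
  output: './output',
  helpers: {
    // Driver helper: the existing project drives browsers through WebDriver.
    WebDriver: {
      url: 'https://example.com', // hypothetical application URL
      browser: 'chrome',
    },
    // Custom helper: CodeceptJS loads the helper class from the `require` path.
    // 'AxeHelper' and './helpers/axe_helper.ts' are hypothetical names.
    AxeHelper: {
      require: './helpers/axe_helper.ts',
    },
  },
  name: 'existing-project',
};
```

Methods defined on a registered helper become available on the `I` actor in tests, which is how a function such as runAccessibilityChecks ends up callable from a scenario.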

Finally, I used the helper in a test that opened the homepage and used the function runAccessibilityChecks to scan the web page.

I liked that it not only provided a solution but also showed it being used in an automated test.

The bot used npm to install the axe-core library. I asked to convert the instructions to yarn, which was an easy task for the bot.

Unfortunately, the “Install axe-helper” step (step 3) suggested a command that returned an error:

Command: npx codeceptjs def --codeceptjs

Error: error: unknown option `--codeceptjs'

The def command updates the TypeScript definitions (the steps.d.ts file) so that the IDE can provide autocompletion. The --codeceptjs option is not mentioned in the documentation.

I reported the error to the chatbot. It apologised and suggested a different approach: “`--codeceptjs` option is not valid for the `def` command in the latest version of CodeceptJS. Instead, you can directly install the `@codeceptjs/axe-helper` package”.

In the revised step 3, the first line of the helper code was:

const { AxePuppeteer } = require('@axe-core/puppeteer');

For context, CodeceptJS is driver-agnostic, i.e. the user can choose and change the test driver via configuration. Currently, tests can use Playwright, WebDriver, Puppeteer, TestCafe, Protractor, Nightmare or Appium (with Cypress planned for the future). The existing project uses WebDriver for web tests and Appium for native mobile tests.

Nevertheless, the chatbot suggested using Puppeteer. I can see two ways of using Puppeteer in my project.

The first option, changing the test driver to Puppeteer, would mean I’d need to change and test the configuration of the web tests and to rewrite a couple of custom functions implemented using WebDriver: getBrowser, checkCheckbox, goBack, clearReactField, fillClearField.

A key risk of this approach is limited browser coverage: Puppeteer supports Chrome, Chromium and Firefox, whereas WebDriver supports Chrome, Firefox, Microsoft Edge, Internet Explorer and Safari. A further risk is unexpected test failures, whose impact would be hard to estimate.

The second option, using Puppeteer for accessibility tests only, would mean creating an additional configuration file to use Puppeteer for accessibility tests and configuring pipelines to run accessibility tests separately.

But this would mean more complexity in the automation solution and in the pipelines.
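Option 2 could be sketched as a second configuration that reuses the base settings, swaps the driver helper and points at a separate test folder. This works because CodeceptJS picks its driver from configuration. All values here (globs, URL, the `a11y` folder, the Puppeteer `show` option and the config file name in the comment) are illustrative assumptions, not the project's real setup.

```typescript
interface CodeceptConfig {
  tests: string;
  output: string;
  helpers: Record<string, Record<string, unknown>>;
  name: string;
}

// Base configuration used by the existing web tests (hypothetical values).
const baseConfig: CodeceptConfig = {
  tests: './tests/web/**/*_test.ts',
  output: './output',
  helpers: {
    WebDriver: { url: 'https://example.com', browser: 'chrome' },
  },
  name: 'web',
};

// Accessibility-only configuration: inherits output from the base config,
// but replaces the driver helper and runs a separate test folder.
// A pipeline job would select it explicitly, e.g.
//   npx codeceptjs run -c codecept.a11y.conf.ts
const a11yConfig: CodeceptConfig = {
  ...baseConfig,
  tests: './tests/a11y/**/*_test.ts',
  helpers: {
    Puppeteer: { url: 'https://example.com', show: false },
  },
  name: 'a11y',
};
```

Even this small sketch shows the extra cost: two configurations to keep in sync and a second pipeline job for the accessibility suite.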

It is true that axe-core has a Puppeteer integration, @axe-core/puppeteer.

CodeceptJS also has a community helper, codeceptjs-a11y-helper, that makes axe-core easy to use, but it is based on another driver, Playwright. Using codeceptjs-a11y-helper would therefore lead to the same two options.

The instructions I had been given didn’t work, and what I really needed was a solution using WebDriver. So I provided more context: “I’m using webdriver as a browser automation library”.

The beginning of the response was promising: “If you’re using WebDriver as the browser automation library in CodeceptJS, you’ll need to make some adjustments to the helper code. Here are the updated steps”. It then provided an adjusted set of seven steps.

Step 3 raised further questions. Its first line still imported @axe-core/puppeteer, and the code that followed mixed WebDriver and Puppeteer:

this.axe = new AxePuppeteer(this.helpers['WebDriver'].browser);

I was confused, so I asked: “Is it OK to use AxePuppeteer in a WebDriver based project?” The chatbot apologised for the confusion and provided code containing only axe-core and WebDriver. Great, it seemed we were on the same page. Before running the code, I asked it to convert the code to TypeScript.

Step 3 caused errors again, which my IDE spotted immediately. The chatbot imported types that were never used, and, moreover, the codeceptjs module does not export them:

'LocatorOrString' is declared but its value is never read.ts(6133)

Module '"codeceptjs"' has no exported member 'LocatorOrString'.

'TestExecutionContext' is declared but its value is never read.ts(6133)

Module '"codeceptjs"' has no exported member 'TestExecutionContext'.ts(2305)

I removed the unused imports without much thought and ran the code. Another error occurred:

TypeError: HelperClass is not a constructor

The chatbot tried to fix it by adding a constructor. Now I could see where TestExecutionContext was intended to be used. The constructor looked like this:

constructor(config: TestExecutionContext) {
  super(config);
  // Initialize axe-core
  this.axeInstance = axeCore;
}

As mentioned, TestExecutionContext is not exported by the codeceptjs module, so the same error appeared again:

Module '"codeceptjs"' has no exported member 'TestExecutionContext'.ts(2305)

The chatbot admitted that “the `LocatorOrString` type and `TestExecutionContext` interface are not necessary for the `AxeHelper` implementation” and simplified the code.

In the updated code, the constructor took a parameter config: any. I was starting to lose patience, as I got the same error again:

TypeError: HelperClass is not a constructor

The chatbot then suggested a different approach: using axe-puppeteer. That package was deprecated on Mar 19, 2021, and its successor is @axe-core/puppeteer.

So, it suggested Puppeteer again.

This was disappointing, because I thought we had already discussed using Puppeteer with the chatbot and agreed not to. I did not expect to have to remind the chatbot of our conversation; I had assumed that every answer took the chat history into account.

So, I replied, with slight disappointment:

“Reminding that I’m using WebDriver, not Puppeteer…”

A few more rounds of questions and answers did not lead to a working solution, so I decided to go through the axe-core documentation and implement my own solution.

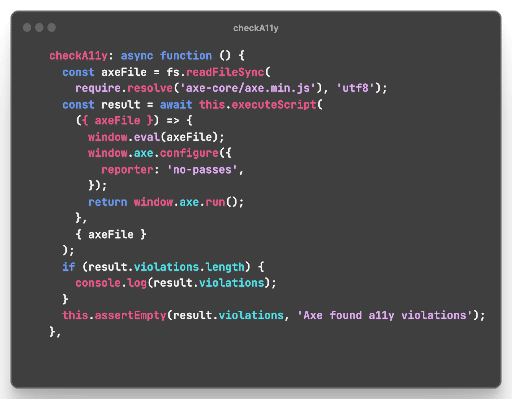

My solution

The solution is not perfect (for example, failure reporting could be better) but it’s good enough to try out how CodeceptJS works with axe-core: scan the web page and preview the results. Some key aspects of the solution were:

- The axe-core script is injected into the browser using the CodeceptJS function this.executeScript().

- axe-core is configurable; in this solution, it is configured to report only failures.

- If axe-core detects any accessibility violations, the test fails.
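These three points could be sketched roughly as follows. This is not the author's actual code. `ScriptRunner` is a hypothetical interface standing in for whatever executes JavaScript in the page; in a CodeceptJS helper, its methods would delegate to the WebDriver helper's `executeScript`/`executeAsyncScript`, and `axeSource` could be the script text the axe-core package exposes for injection. `AxeViolation` declares only the subset of axe-core's result fields the sketch needs. `resultTypes: ['violations']` is axe-core's run option for limiting which result types are fully processed; check its exact behaviour in the axe-core API docs for your version.

```typescript
// Subset of the axe-core results shape used by this sketch.
interface AxeNode {
  target: string[];
}
interface AxeViolation {
  id: string;
  impact: string | null;
  help: string;
  nodes: AxeNode[];
}
interface AxeResults {
  violations: AxeViolation[];
}

// Hypothetical abstraction over "run this in the browser under test".
interface ScriptRunner {
  inject(source: string): Promise<void>;
  runAxe(options: Record<string, unknown>): Promise<AxeResults>;
}

// Ask axe to fully process only violations (other result types are trimmed).
const AXE_OPTIONS = { resultTypes: ['violations'] };

// One line per violation: impact, rule id, description and affected elements.
export function formatViolations(violations: AxeViolation[]): string {
  return violations
    .map((v) => {
      const targets = v.nodes.map((n) => n.target.join(' ')).join(', ');
      return `[${v.impact ?? 'unknown'}] ${v.id}: ${v.help} (${targets})`;
    })
    .join('\n');
}

export async function checkAccessibility(runner: ScriptRunner, axeSource: string): Promise<void> {
  // 1. Inject the axe-core script into the page.
  await runner.inject(axeSource);
  // 2. Run axe with the configuration above.
  const results = await runner.runAxe(AXE_OPTIONS);
  // 3. Fail the test if any violation was reported.
  if (results.violations.length > 0) {
    throw new Error(
      `${results.violations.length} accessibility violation(s):\n` +
        formatViolations(results.violations),
    );
  }
}
```

Keeping the browser calls behind an interface also makes the pass/fail and reporting logic testable without a browser, which is one way to work on the failure reporting mentioned above.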

Observations and Conclusions

- I wouldn’t expect a chatbot to solve all the problems or complete all the given tasks, especially niche tasks that were described in this section. It has the potential to become a better assistant and optimise everyday work by saving time, brainstorming ideas and improving quality, though.

- It needs to give the user precise instructions: the more accurate the instructions, the better the user’s chance of success.

- It provides context and explanations around instructions, which is educational for the user.

- It tries to act like a human by:

  - Rephrasing the question and communicating how it understood the question.

  - Showing confidence, e.g. using the phrase “Certainly!”

  - Apologising for any confusion.

- The chatbot is good at converting human language into code and converting JavaScript code into TypeScript. I expect that we’ll see rapid evolution in AI’s ability to convert human language into code and its ability to convert one programming language into another.

- The chatbot does not always take chat history and user feedback into account. As a result, it can propose a solution similar to one that was previously agreed to be unsuitable. I think this may improve in the future.

Trying out AI driven testing tools

I’m interested in how artificial intelligence has affected software testing tools and how it’s solving testing and automation challenges.

Asking ChatGPT 3 for AI-based testing tools

I used two sources to generate a list of testing tools that use AI: ChatGPT and Google.

Q: Give me a list of software testing tools based on AI

A: Here is a list of some popular software testing tools that use AI (Artificial Intelligence) and ML (Machine Learning) technologies:

- Testim – A cloud-based platform that uses machine learning algorithms to create and execute automated tests for web applications.

- Functionize – A platform that uses AI to automatically generate and execute functional and performance tests for web applications.

- Applitools – A visual testing platform that uses AI to compare screenshots of application UIs to identify visual changes.

- Mabl – A cloud-based testing platform that uses machine learning to automatically create and maintain test scripts.

- Test.ai – A platform that uses AI to generate test cases and execute tests for mobile applications.

- Diffblue – A tool that uses AI to automatically generate unit tests for Java code.

- ReTest – A tool that uses AI to automatically create and execute end-to-end regression tests for web applications.

- Appvance IQ – A platform that uses AI to generate and execute functional, performance, and security tests for web applications.

- TestCraft – A platform that uses AI to automatically generate and execute automated tests for web applications.

- Functionize – A platform that uses AI to automatically generate and execute functional and performance tests for web applications.

Note that this is not an exhaustive list, and there are many other software testing tools that use AI and ML technologies to improve the testing process.

A Google query for “software testing tools based on AI” found these additional tools:

- askUI

- Virtuoso

- Perfecto Scriptless

Testim

Testim is a tool for web and mobile e2e tests.

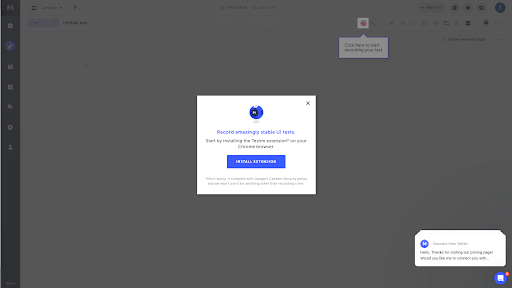

It is built to use a capture/playback automation approach: record test steps from the user interface (capture) and then run the sequence in the cloud. Of course, the sequence can be easily modified to maintain the tests. Testim uses AI to fix UI element locators.
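To make the capture/playback idea concrete, here is a toy sketch: recorded steps are stored as plain data and replayed in order against a driver. The `Step`, `Recorder` and `Driver` names are hypothetical and unrelated to Testim’s internal format; a real tool adds waits, assertions and locator repair on top of this.

```typescript
// A recorded step: what the user did and on which element.
type Step =
  | { action: 'goto'; url: string }
  | { action: 'click'; locator: string }
  | { action: 'type'; locator: string; text: string };

// Hypothetical driver interface the player talks to.
interface Driver {
  goto(url: string): void;
  click(locator: string): void;
  type(locator: string, text: string): void;
}

// "Capture": the recorder appends each user action as data.
class Recorder {
  readonly steps: Step[] = [];
  record(step: Step): void {
    this.steps.push(step);
  }
}

// "Playback": replay the recorded sequence in order.
function replay(steps: readonly Step[], driver: Driver): void {
  for (const step of steps) {
    switch (step.action) {
      case 'goto':
        driver.goto(step.url);
        break;
      case 'click':
        driver.click(step.locator);
        break;
      case 'type':
        driver.type(step.locator, step.text);
        break;
    }
  }
}
```

Because the recording is just data, modifying the sequence means editing an array entry rather than re-recording the whole test.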

Signing in to Testim was easy: I could use my Google account (GitHub and Azure AD are also available). The trial version included a wizard guiding the user to install the Testim Editor Chrome plugin to capture test steps.

I struggled a bit after installing Testim Editor, though. Testim didn’t detect that the plugin was already installed, and I couldn’t see how to exit the wizard, as only a single action was available: “Click here to start recording your test”. After a page reload, the wizard was no longer available.

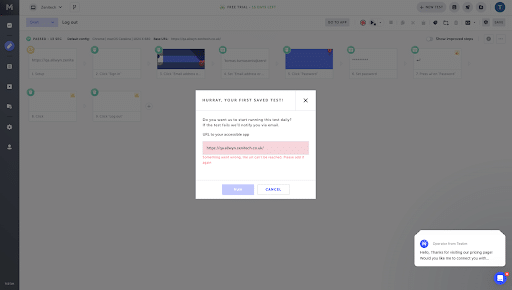

It took me one minute to create a simple sign-in/sign-out test, although I hit an issue when saving the first test. The web application was accessible via VPN, so I suspect that caused the error. The error message wasn’t informative, just “Something went wrong”, with a suggestion to try again.

I then tried a publicly available application, and saving the test worked well.

Testim also has a few learning resources: events, webinars, blogs and training materials.

askUI

askUI is about solving maintenance problems with selectors in UI automation by using modern deep learning technologies: it takes screenshots and identifies UI elements based on their visual features.

askUI provides instructions and videos on how to install the tool, and setup was quick and easy. It connects to a controller at operating-system level and uses it to control the mouse, keyboard and screen.

This is the controller import statement:

import { aui } from './helper/jest.setup';

First, it needs to scan the screen and identify and index the UI elements so that they can be used in tests. For that, there is a function:

await aui.annotate();

I was surprised that, after I attempted to run the first example test, my IDE, Visual Studio Code, asked for permission to record audio. I refused, and the result of the first run (scanning the screen) was an HTML document showing my two desktop screens as empty, even though I was using two monitors: one with the IDE open and the other with the application under test open in Chrome.

Test.ai

Test.ai was presented as one of the first AI-driven testing tools in the “AI for User Flow Verification” presentation at the “AI Summit Guild 2018” conference. ChatGPT 3 listed this tool as well.

Unfortunately, the Test.ai website looks like a placeholder. I couldn’t find any information on how to start using it, either on the site or via Google Search.

ReTest

ReTest has a few products related to test automation. One of them is AI-Based Test Generation which I’m currently interested in.

On the website, there is a field “Enter URL to test” with a “Generate Tests” button next to it, so it seems that ReTest generates tests for the application under test autonomously. Unfortunately, entering my URL, https://books.zenitech.co.uk/, returned an error: ERR_CONNECTION_REFUSED. The same happened with https://www.google.com/.

TestCraft

The TestCraft website redirects to another domain, perfecto.io. Apparently, TestCraft has become part of the Perfecto Scriptless solution. The TestCraft LinkedIn page says “We’re Moving! TestCraft is part of Perfecto by Perforce!”.

Meanwhile, a blog post on the Perfecto website welcomes TestCraft: “It’s in this context that I wanted to welcome the TestCraft team to Perforce. Perforce has closed the acquisition of Testcraft, expanding our already close partnership to a full team member.” Links to try out TestCraft also lead to Perfecto Scriptless.

Mabl

The Mabl automation solution looks promising because of its breadth of functionality, including must-have features like cross-browser, API, data-driven and mobile testing. It also provides some non-functional testing capabilities, such as accessibility and performance testing.

Mabl has documentation, tutorials, and blog posts. Also, they organise workshops to train users.

I found it quick and easy to create the first test.

First, I needed to install the mabl desktop software to preview the control panel, and the wizard guided me through the setup. Mabl uses a capture/playback automation approach: it records steps in a browser and re-executes them during test runs (you need Google Chrome installed to capture the steps).

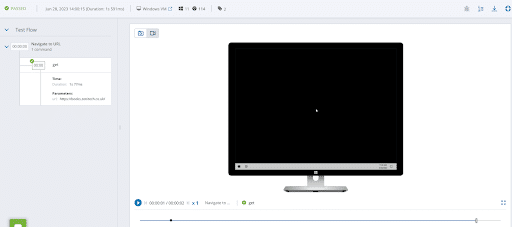

Perfecto

The tool welcomes you with a suggestion to choose a guide for manual or automated testing.

After selecting automated testing, I needed to enter the platform, URL to test, and language to generate the script. Available languages included Java, JavaScript, Python, Ruby, C#.

The initial code was generated instantly, and the wizard suggested downloading the project and running it. My IDE asked whether I trusted the authors of the code. Perfecto ran the first test, which simply navigated to the URL, in the cloud. After that, the report opened in the browser (although I could not see the browser in the recorded video of the test).

Perfecto has a variety of testing solution capabilities, such as capture/playback for manual testing, tests as code (multiple programming languages) for automated tests, testing on various platforms including mobile devices, performance testing and other useful features.

Functionize

Functionize is a web automation platform, but the trial is available only after organising a catch up with Functionize representatives.

AI seems to be one of the main selling points, as it is mentioned many times on the website. Instead of relying on selectors, deep learning is used to recognise changes in the UI and adjust the tests (an alternative approach to reducing the time spent maintaining automated tests). The tool suggests corrections to the tests, so the user controls the boundaries of the AI’s operation. A Chrome-based plugin is used to create tests (capture/playback approach).

Functionize has an extensive set of learning resources, such as tutorials, webinars and Functionize certification.

Appvance

Appvance supports web and mobile application testing.

The Appvance demo session is available after scheduling a meeting with the vendor.

Appvance creates and executes tests on its own, then reports the results, defects and warnings. The vendor says that AI covers potential user flows that a tester may miss, so test coverage may increase. The created tests are represented in a human-readable format. On the website, you can find case studies of how the tool helped reduce the number of bugs found by users. The documentation is comprehensive, and the site provides webinars, events, training and certification.

Virtuoso

Virtuoso supports web application testing.

It generates the first set of tests autonomously, but the tester can supplement them with additional tests. These can be written in natural language: for example, the user can write the command “Navigate to https://books.zenitech.co.uk” in English, and it will be translated into JavaScript code.
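Virtuoso’s translation is AI-driven. The toy sketch below only illustrates the simpler keyword-driven idea it builds on: a plain-English command is matched against known phrase patterns and turned into a line of test code. The patterns and the CodeceptJS-style output (`I.amOnPage`, `I.click`, `I.fillField`) are my assumptions for illustration, not Virtuoso’s real output.

```typescript
// Each rule maps an English phrase pattern to a line of CodeceptJS-style code.
interface Rule {
  pattern: RegExp;
  toCode: (m: RegExpMatchArray) => string;
}

const rules: Rule[] = [
  { pattern: /^navigate to (\S+)$/i, toCode: (m) => `I.amOnPage('${m[1]}');` },
  { pattern: /^click (?:on )?"([^"]+)"$/i, toCode: (m) => `I.click('${m[1]}');` },
  {
    pattern: /^write "([^"]*)" in (?:field )?"([^"]+)"$/i,
    toCode: (m) => `I.fillField('${m[2]}', '${m[1]}');`,
  },
];

// Translate one command; returns undefined when no rule matches.
function translate(command: string): string | undefined {
  for (const rule of rules) {
    const match = command.trim().match(rule.pattern);
    if (match) return rule.toCode(match);
  }
  return undefined;
}
```

A rule-based translator like this breaks as soon as the wording varies (and it does not escape quotes in the generated code), which is the kind of gap an AI-based translator aims to close.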

Virtuoso also has auto-healing locators: it creates a hint for each UI element, and AI works out whether the same element’s locator has changed. Virtuoso seems to have comprehensive learning material: documentation, tutorials, webinars, a blog and certification.
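The “hint” idea behind auto-healing locators can be illustrated without any AI: record several attributes of an element when the test is created and, if the primary locator no longer matches, pick the candidate that still shares the most recorded attributes. This is a simplified heuristic for illustration only; the `ElementHint` shape, the scoring and the `minScore` threshold are hypothetical, and Virtuoso, Testim and Functionize use their own (AI-based) approaches.

```typescript
// Attributes captured for an element when the test was recorded.
interface ElementHint {
  id?: string;
  text?: string;
  tag?: string;
  name?: string;
  classes?: string[];
}

// A simplified view of an element currently on the page.
interface PageElement extends ElementHint {
  locator: string;
}

// Count how many recorded attributes still match the candidate element.
function score(hint: ElementHint, el: PageElement): number {
  let s = 0;
  if (hint.id !== undefined && hint.id === el.id) s++;
  if (hint.text !== undefined && hint.text === el.text) s++;
  if (hint.tag !== undefined && hint.tag === el.tag) s++;
  if (hint.name !== undefined && hint.name === el.name) s++;
  const shared = (hint.classes ?? []).filter((c) => el.classes?.includes(c));
  if (shared.length > 0) s++;
  return s;
}

// Use the primary locator if it still matches; otherwise "heal" by choosing
// the best-scoring element, provided it matches at least `minScore` attributes.
function findElement(
  primary: string,
  hint: ElementHint,
  page: PageElement[],
  minScore = 2,
): PageElement | undefined {
  const direct = page.find((el) => el.locator === primary);
  if (direct) return direct;
  let best: PageElement | undefined;
  let bestScore = 0;
  for (const el of page) {
    const s = score(hint, el);
    if (s > bestScore) {
      best = el;
      bestScore = s;
    }
  }
  return bestScore >= minScore ? best : undefined;
}
```

The threshold is what keeps “healing” from silently clicking the wrong element; tools that suggest corrections rather than applying them (as described for Functionize) leave that final decision to the user.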

Applitools

Applitools is a visual validation tool for web, mobile and desktop applications. This tool can be used as a library and it supports multiple languages: Java, JavaScript, Python, C#, Ruby.

It can be integrated into automation solutions based on various technologies, such as Selenium, Cypress, TestCafe, WebdriverIO, Robot Framework and Appium. Applitools uses AI to solve a core visual validation challenge: comparing screenshots pixel by pixel produces false-positive failures, e.g. when the same test runs on different browsers that render the page slightly differently. AI recognises that an element is the same despite pixel differences.
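The false-positive problem with strict pixel comparison is easy to demonstrate. The sketch below compares two greyscale “screenshots” (flat arrays of 0-255 values, a made-up representation) first exactly and then with a per-pixel tolerance: a small rendering difference, such as anti-aliasing on another browser, fails the exact check but passes the tolerant one, while a real visual change still fails. Applitools’ AI goes well beyond a fixed tolerance, so treat this only as an illustration of the problem it addresses; the `tolerance` and `maxRatio` defaults are arbitrary.

```typescript
// A greyscale screenshot as a flat array of 0-255 values (hypothetical format).
type Screenshot = { width: number; height: number; pixels: number[] };

// Count pixels whose values differ by more than `tolerance`.
function diffCount(a: Screenshot, b: Screenshot, tolerance: number): number {
  if (a.width !== b.width || a.height !== b.height) {
    throw new Error('Screenshots must have the same dimensions');
  }
  let count = 0;
  for (let i = 0; i < a.pixels.length; i++) {
    if (Math.abs(a.pixels[i] - b.pixels[i]) > tolerance) count++;
  }
  return count;
}

// Strict comparison: any difference at all counts as a failure.
const exactMatch = (a: Screenshot, b: Screenshot): boolean => diffCount(a, b, 0) === 0;

// Tolerant comparison: ignore small per-pixel differences and allow a small
// share of pixels (maxRatio) to differ before flagging a visual change.
function looksSame(a: Screenshot, b: Screenshot, tolerance = 8, maxRatio = 0.01): boolean {
  return diffCount(a, b, tolerance) / a.pixels.length <= maxRatio;
}
```

In this toy, a baseline of `[200, 200, 50, 50]` against `[201, 199, 52, 50]` fails `exactMatch` but passes `looksSame`, whereas `[200, 200, 200, 200]` fails both.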

Applitools has detailed documentation, tutorials, training, blogs, case studies.

Summary

The table below provides an overview of each tool. During testing, I noticed that some tools were not available, or I couldn’t find how to try them out, so the “Available” column shows whether each tool could be tried. The legal implications around the use of these tools are the subject of a dedicated article in this series, New Beings: Legal issues and AI.

Some tools are entire testing and automation solutions with a lot of functionality. For these, I’m interested in what it would be like to learn how to use the tool, so the “Documentation” column shows how comprehensive the documentation and other learning resources are.

I noticed that some tools have prerequisites before you can try out their functionality. Some of these are barriers to use, such as installing software, having a meeting with the vendor or granting too many access rights. The “Prerequisites to start” column shows where this applies.

The “AI power” column highlights what kind of AI-related features each tool has, “Automation approach” shows how automated tests are developed, and “Running tests in cloud” shows whether the tool has this capability.

| Tool | Available | Documentation | Prerequisites to start | AI power | Automation approach | Running tests in cloud |
|---|---|---|---|---|---|---|
| Testim | Yes | Good | Chrome plugin installed | Auto-healing locators | Capture/playback | Yes |
| askUI | Yes* | Good | Node library installed, access to record screen and audio | Finding and using UI elements | UI automation | Yes |
| Test.ai | No | – | – | AI-generated autonomous tests | – | Yes |
| ReTest | No | – | – | – | – | Yes |
| TestCraft | No** | – | – | – | – | – |
| Mabl | Yes | Great | Desktop application installed | Auto-healing locators | Capture/playback | Yes |
| Perfecto | Yes | Good | Download and execute generated code | AI-Driven Analytics | Tests as code, capture/playback | Yes |
| Functionize | Yes | Great | Have a meeting with the vendor | Auto-healing locators, Visual Testing Powered by AI | Capture/playback | Yes |
| Appvance | Yes | Great | Have a meeting with the vendor | AI-generated autonomous tests | AI-driven automation | Yes |
| Virtuoso | Yes | Great | Have a meeting with the vendor | AI-generated autonomous tests, Auto-healing locators, AI assistance | AI-driven automation, Keyword-driven automation (natural language) | Yes |
| Applitools | Yes | Great | Download tool’s code as a library | Visual Testing Powered by AI | Visual validation | Yes |

* some features are Beta: Workflows and Report

** available but as a part of another solution

Conclusion

AI has already affected the software testing world. I found several software testing tools and solutions that are supported by AI at some level. Some are driven by AI, with tools like Test.ai and Appvance having AI do the testing. Others, like Testim and Mabl, use AI features to a lesser extent. For some tools, AI is their key selling point; others have added specific AI features to solve particular testing challenges.

There’s been an uptick in activity around AI and testing tools in the past few years. New tools appeared, and some of them disappeared quite quickly. Existing tools evolved and started using AI. I think a similar pattern may happen in the future.

Currently, AI is used as an assistance technology for testing activities. It helps to tackle some time consuming testing challenges, such as:

- False positive test results caused by brittle tests. These usually appear after changes to the user interface of the software under test, and they increase the effort spent on test maintenance. This risk is being mitigated by:

  - auto-healing tests, where AI is used to identify and recognise UI elements;

  - AI-powered visual validation, where AI recognises changes visible to the human eye and can be configured to ignore other changes.

- Escaped defects. This risk always exists in complex software. AI may help mitigate it by brainstorming test ideas and generating tests, and its support in these activities may increase test coverage and save time and effort.

- The technical knowledge needed to implement automated tests. There is a noticeable trend towards capture/playback, an approach that enables non-technical people to develop test automation. AI may also be used to convert natural language into code. I personally prefer keeping tests as code, but I see potential here: time saved on technical implementation details could be spent on other activities, such as generating additional test ideas.

It’s clear that we’re seeing AI develop in many interesting ways, but it still requires a lot of guidance from an expert. I would say, though, that AI is already something that’s accelerating productivity, thanks to the challenges it helps solve.

Of all the tools I tested, I would trust Testim, Mabl and Applitools for production test development. However, askUI, Appvance, Virtuoso, Perfecto and Functionize need more exploration.

If you’re interested in learning more about our exploration of AI for development, see our previous articles in the ‘New Beings’ series.