This article is part of the ‘New Beings’ series of articles from Zenitech and Stevens & Bolton, examining the practicalities, issues and possibilities of using AI in development.

Introduction

Developers hate unit testing.

OK, “hate” is a strong word, but most would probably admit that writing unit tests is a nuisance. The reasons vary, but the time it takes, the repetitive boilerplate setup and implementing the actual scenarios are the major sources of annoyance.

The problem is that solid unit testing is a vital part of software development.

In this article, we’ll explore some of the basic concepts around unit testing and investigate how tools that rely on Large Language Models (LLMs) can make unit testing less tiresome without compromising quality.

What is unit testing and why is it important?

Units are the smallest pieces of code that can be logically isolated from larger pieces of functionality and tested individually. Unit testing refers to writing tests for these small pieces of code.
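As a minimal sketch, here is a unit and its tests in TypeScript. The `Task` shape and the `countOpenTasks` function are hypothetical, loosely modelled on the task app discussed later. The tests use a tiny hand-written assertion helper instead of jest so that the example runs on its own.

```typescript
// Hypothetical task shape, used only for illustration.
interface Task {
  id: number;
  title: string;
  important: boolean;
  completed: boolean;
}

// The unit: a small, pure function with no external dependencies,
// so it can be tested in complete isolation.
function countOpenTasks(tasks: Task[]): number {
  return tasks.filter((task) => !task.completed).length;
}

// Minimal stand-in for a test runner's assertion (e.g. jest's expect().toBe()).
function assertEqual<T>(actual: T, expected: T, scenario: string): void {
  if (actual !== expected) {
    throw new Error(`${scenario}: expected ${String(expected)}, got ${String(actual)}`);
  }
}

// The unit tests: each scenario checks one behaviour of the unit.
assertEqual(countOpenTasks([]), 0, "an empty list has no open tasks");
assertEqual(
  countOpenTasks([
    { id: 1, title: "Write tests", important: true, completed: false },
    { id: 2, title: "Ship feature", important: false, completed: true },
  ]),
  1,
  "completed tasks are not counted",
);
console.log("countOpenTasks: all scenarios passed");
```

In a jest suite, each scenario would typically live in its own `test(...)` block and use `expect(countOpenTasks(tasks)).toBe(1)` instead of the helper.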

We unit test to ensure that units work as expected before integrating them with other units. When integrated into CI/CD pipelines, automated unit tests become the first, and one of the most important, barriers against shipping substandard code to customers.

However, unit testing is often ignored or given minimal attention, either because the product needs to ship quickly or because it’s only a minimum viable product. After all, why spend precious time on it and incur greater development costs?

The saving is rarely worth it. Neglecting unit testing usually results in buggy products and ends up costing the business more in time and money.

Projects with mature CI/CD pipelines usually enforce high code coverage thresholds as part of those pipelines, as they should. The downside is that enforcing the tests increases the time to value, since every change has to ship with enough tests to meet the threshold.
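For illustration, Jest can enforce such a gate itself through its `coverageThreshold` option: when coverage is collected and falls below any configured percentage, the test run fails, and so does the pipeline step running it. The percentages below are placeholders rather than a recommendation, and a TypeScript config file like this one needs `ts-node` installed for Jest to load it.

```typescript
// jest.config.ts: a minimal sketch; the percentages are illustrative placeholders.
const config = {
  // Collect coverage on every run so the thresholds below are always checked.
  collectCoverage: true,
  // Jest fails the run if global coverage drops below any of these values.
  coverageThreshold: {
    global: {
      branches: 80,
      functions: 80,
      lines: 80,
      statements: 80,
    },
  },
};

export default config;
```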

Using AI for test writing acceleration

Luckily, we live in the era of large language models.

We’re already seeing the impressive capabilities of large language model neural networks. We’ve seen ChatGPT answer complicated questions spanning areas from philosophy to advanced mathematics and, yes, software development.

Language models work by statistically predicting what comes next after the prompt you feed them, based on patterns learned during training. Large language models are trained on vast amounts of text from many sources on the web, which is why their answers are accurate much of the time, though not always.
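The idea of “statistically predicting what comes next” can be illustrated with a toy bigram model: count which word follows which in a training text, then predict the most frequent follower. This is only an analogy; real LLMs use neural networks over subword tokens and far longer contexts, and nothing below reflects how any specific model is implemented.

```typescript
// Toy bigram "language model": for each word, count which words follow it.
type FollowerCounts = { [word: string]: { [next: string]: number } };

function train(corpus: string): FollowerCounts {
  const words = corpus.toLowerCase().split(/\s+/).filter((w) => w.length > 0);
  // Prototype-free objects, so words like "constructor" can't clash with Object methods.
  const counts: FollowerCounts = Object.create(null);
  for (let i = 0; i < words.length - 1; i++) {
    const current = words[i];
    const next = words[i + 1];
    if (!counts[current]) counts[current] = Object.create(null);
    counts[current][next] = (counts[current][next] || 0) + 1;
  }
  return counts;
}

// Returns the most frequent follower of `word`, or undefined if the model
// never saw anything follow it.
function predictNext(model: FollowerCounts, word: string): string | undefined {
  const followers = model[word.toLowerCase()];
  if (!followers) return undefined;
  let best: string | undefined;
  let bestCount = 0;
  for (const candidate of Object.keys(followers)) {
    if (followers[candidate] > bestCount) {
      best = candidate;
      bestCount = followers[candidate];
    }
  }
  return best;
}

const model = train("the test passes the test fails the test passes");
console.log(predictNext(model, "test")); // → passes (seen twice after "test", vs "fails" once)
```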

Code-generating LLMs are trained on specialised datasets drawn from popular software forums and documentation, and can produce small-scale solutions to programming queries. Because programming languages are generally more structured than natural language, the tokens they predict in answer to a coding query are probably more accurate than their answers to general questions.

One thing to keep in mind, though, is that the models are trained on datasets that may contain obsolete information, such as outdated library APIs. Always review generated code before treating it as correct.

Unit tests are software that tests software, which means we can use LLMs to speed up unit test writing and take much of the boilerplate and setup code off our hands. Code-generating LLM tools integrate well with integrated development environments (IDEs) through plugins, and some of them can take the wider context of your code into account to improve their suggestions.

Combine models trained on vast amounts of data with context awareness, and the result can be accurate test scenarios that cover much of your code, identify edge cases and raise code coverage.

Hands-on: Using GitHub Copilot to test a simple frontend application

For our practical experiment, we chose a simple frontend application that lacked unit tests and set out to test it completely. The application is written in React and manages state with @reduxjs/toolkit. We chose to write the unit tests for the components with jest, enzyme and @testing-library/react-hooks.
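Reducers are often the easiest place to start in a Redux app, since they are pure functions of `(state, action)`. The sketch below is a plain TypeScript reducer rather than one built with `@reduxjs/toolkit`’s `createSlice`, so it runs without dependencies; the `Task` shape and action names are hypothetical, loosely based on the app’s “Important” and “Completed” fields.

```typescript
// Hypothetical task shape and state; the real app's slice may differ.
interface Task {
  id: number;
  title: string;
  important: boolean;
  completed: boolean;
}

interface TasksState {
  tasks: Task[];
}

// Hypothetical actions, named in the "slice/action" style that createSlice generates.
type TasksAction =
  | { type: "tasks/added"; payload: Task }
  | { type: "tasks/edited"; payload: { id: number; changes: Partial<Omit<Task, "id">> } }
  | { type: "tasks/completedToggled"; payload: { id: number } };

// A pure reducer: returns new objects and never mutates its input,
// which is what makes it straightforward to unit test.
function tasksReducer(state: TasksState, action: TasksAction): TasksState {
  switch (action.type) {
    case "tasks/added":
      return { tasks: state.tasks.concat([action.payload]) };
    case "tasks/edited": {
      const { id, changes } = action.payload;
      return { tasks: state.tasks.map((t) => (t.id === id ? { ...t, ...changes } : t)) };
    }
    case "tasks/completedToggled": {
      const { id } = action.payload;
      return { tasks: state.tasks.map((t) => (t.id === id ? { ...t, completed: !t.completed } : t)) };
    }
    default:
      return state;
  }
}

// Tiny assertion helper standing in for jest's expect().
function check(condition: boolean, scenario: string): void {
  if (!condition) throw new Error(`Failed: ${scenario}`);
}

// Scenario: adding a task appends it without mutating the previous state.
const empty: TasksState = { tasks: [] };
const added = tasksReducer(empty, {
  type: "tasks/added",
  payload: { id: 1, title: "Write tests", important: false, completed: false },
});
check(added.tasks.length === 1 && empty.tasks.length === 0, "add is immutable");

// Scenario: toggling "completed" flips only that flag.
const toggled = tasksReducer(added, { type: "tasks/completedToggled", payload: { id: 1 } });
check(toggled.tasks[0].completed && !added.tasks[0].completed, "toggle completed");

// Scenario: editing merges the changes and keeps the other fields.
const edited = tasksReducer(toggled, { type: "tasks/edited", payload: { id: 1, changes: { important: true } } });
check(edited.tasks[0].important && edited.tasks[0].title === "Write tests", "edit task");
console.log("tasksReducer: all scenarios passed");
```

A reducer generated by `createSlice` can be exercised the same way: call it with a state and an action, then assert on the returned state.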

TL;DR: we wrote 17 test suites containing 68 scenarios and reached ~98% code coverage in just under two hours in total, and it could have taken even less.

Our AI assistants were GitHub Copilot (integrated through the official plugin into a GitHub Codespace) and ChatGPT.

It’s also worth mentioning that we had very little experience of using either tool for unit testing when we started this experiment. In addition, we didn’t write the application, so we were not familiar with its logic, although it was simple enough to grasp quickly. Both factors may explain why the first tests took longer than necessary (the very first suite, for a very simple component, took ~30 minutes to write).

We mostly ran the two AI assistants in parallel to see which one produced accurate results faster.

We fed ChatGPT prompts such as “Please test the following React component, using jest and enzyme”, followed by the actual component code pasted into the dialog box. After a few seconds, it generated the complete test file (mocks included), although the result was not always 100% correct. In those cases, follow-up prompts asking it to fix the wrong tests eventually produced the expected behaviour.

Another difficulty with ChatGPT was that, after a number of prompts, we hit what we believe was some kind of usage limit: the tool became generally slow, and it recovered after a period of inactivity. Having a second AI assistant on hand to keep writing tests offset the inconvenience.

Unlike ChatGPT, GitHub Copilot made autocomplete-style suggestions directly in the editor. It mostly suggested a full test scenario at a time, but if we rejected a suggestion and started typing in a different direction, it switched to suggesting one line at a time until the direction was clear. We found this style of suggestion easier to read and understand, and it also let us correct mistakes on the fly.

GitHub Copilot did not initially suggest mocking the dependencies, but it did so once our intention to write a mock was clear.
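For readers new to mocking: a mock replaces a real dependency with a stand-in whose behaviour the test controls and whose calls it can inspect. In jest this is usually done with `jest.fn()` or `jest.mock()`; the sketch below hand-rolls the same idea in plain TypeScript. `TaskStorage`, `completeTask` and `MockTaskStorage` are hypothetical names, not code from the app we tested.

```typescript
// Hypothetical task shape, used only for illustration.
interface Task {
  id: number;
  title: string;
  important: boolean;
  completed: boolean;
}

// Hypothetical dependency: persistence for tasks (e.g. a wrapper around localStorage).
interface TaskStorage {
  load(): Task[];
  save(tasks: Task[]): void;
}

// Unit under test: marks a task as completed and persists the result.
// It only knows the TaskStorage interface, so a mock can be injected.
function completeTask(storage: TaskStorage, id: number): void {
  const updated = storage.load().map((t) => (t.id === id ? { ...t, completed: true } : t));
  storage.save(updated);
}

// Hand-rolled mock: serves canned data and records every save() call,
// similar in spirit to what jest.fn() provides.
class MockTaskStorage implements TaskStorage {
  readonly savedCalls: Task[][] = [];
  private readonly initial: Task[];

  constructor(initial: Task[]) {
    this.initial = initial;
  }

  load(): Task[] {
    return this.initial;
  }

  save(tasks: Task[]): void {
    this.savedCalls.push(tasks);
  }
}

// Scenario: the unit saves exactly once, with only the target task completed.
const storage = new MockTaskStorage([
  { id: 1, title: "Write tests", important: true, completed: false },
  { id: 2, title: "Refactor", important: false, completed: false },
]);
completeTask(storage, 1);

if (storage.savedCalls.length !== 1) throw new Error("expected exactly one save");
const saved = storage.savedCalls[0];
if (!saved[0].completed || saved[1].completed) throw new Error("only task 1 should be completed");
console.log("completeTask: mock assertions passed");
```

With jest, the recorded calls would typically come from a mock function instead, e.g. `const save = jest.fn()` followed by `expect(save).toHaveBeenCalledTimes(1)`.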

Thanks to context awareness, Copilot’s suggestions improved with each test we wrote, adapting to the style we signalled we preferred by correcting its earlier suggestions.

However, context awareness did not always shine. For example, there were situations where the generated tests looked for elements whose names differed from those in the actual components (this also happened with ChatGPT). And even when an object was declared with a specific type, the initial suggestion sometimes ignored the type entirely and filled in properties guessed from the variable name alone.

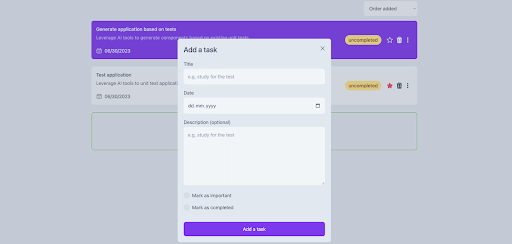

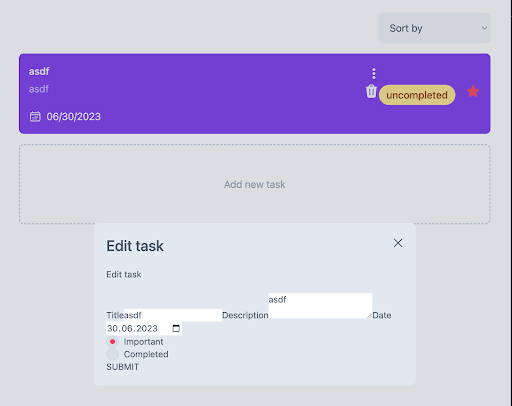

Hands-on: Using GitHub Copilot to generate a functional application based on previously written tests

We wanted to take this a step further and generate the components’ code from the tests, following a test-driven development (TDD) pattern, so we deleted the code of some of the components tested in the previous step. Because the tests had been generated from existing code rather than written from a solid plan, the results were not great and served more as a starting point.

However, there was hardly any typing involved, and the autocomplete suggestions were pretty good, even if they needed the occasional gentle nudge. For example, Copilot initially suggested a checkbox input for the “Important” and “Completed” fields, but once we imported the custom component, it suggested that instead. The generated component was, of course, unstyled, but it was functional: we managed to create a new task and then edit it.
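The TDD loop behind this experiment (write the tests first, then accept or nudge generated code until they pass) can be sketched for a single function. `createTask`, its options and the blank-title rule are hypothetical, not the app’s actual behaviour; the spec comes first, followed by the simplest implementation that makes it pass.

```typescript
// Hypothetical task shape, used only for illustration.
interface Task {
  id: number;
  title: string;
  important: boolean;
  completed: boolean;
}

function check(condition: boolean, message: string): void {
  if (!condition) throw new Error(`Spec failed: ${message}`);
}

// Step 1 (red): the spec is written first. Until createTask exists and
// behaves as described, running it fails.
function createTaskSpec(): void {
  const task = createTask(1, "  Buy milk  ");
  check(task.title === "Buy milk", "the title is trimmed");
  check(!task.important && !task.completed, "flags default to false");

  check(createTask(2, "Pay rent", { important: true }).important, "important can be set on creation");

  let rejected = false;
  try {
    createTask(3, "   ");
  } catch {
    rejected = true;
  }
  check(rejected, "blank titles are rejected");
}

// Step 2 (green): the simplest implementation that satisfies the spec.
// Step 3 (refactor) would tidy this up while keeping the spec passing.
function createTask(id: number, rawTitle: string, options: { important?: boolean } = {}): Task {
  const title = rawTitle.trim();
  if (title.length === 0) {
    throw new Error("Task title must not be blank");
  }
  return { id, title, important: options.important === true, completed: false };
}

createTaskSpec();
console.log("createTask spec passed");
```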

Legal considerations

There are several legal considerations surrounding AI, such as who owns the work AI produces, who is liable (and held accountable) for that work, how people’s data can still be protected, and what to do about bias affecting AI-generated work.

Governments and international bodies are working out the best way to regulate AI. Right now, there’s a lot to consider.

For example, AutoGPT uses OpenAI’s GPT-4 language model, and both it and ChatGPT are covered by OpenAI’s terms, which are governed by Californian law and require mandatory arbitration to resolve any disputes arising from them.

As for Copilot, its terms are governed by the laws of British Columbia, Canada, and require arbitration under Canadian law.

For now, as our article on legal issues and AI recommends, organisations need to proceed with caution.

Conclusions

Are AI assistants helpful in accelerating unit test writing? Yes.

Can they fully test an application with little to no effort from the developer? No.

AI assistants can accelerate the process of writing unit tests, but they still need a developer in the loop. The tests they generate serve as a valuable foundation: the scenarios are well identified, even if they are not always tested correctly.

AI assistants can also prove very helpful for test-driven development, suggesting solutions that pass the tests, provided the developer supervises them appropriately. The initial solutions are not perfect, but this fits the classic TDD cycle: failing test, passing test, refactoring.

Used correctly, these tools can make TDD easier to implement and help foster a culture of responsibility and solid planning before writing code.

AI-assisted unit testing significantly speeds up the development cycle by automating repetitive and time-consuming tasks. Developers can focus their efforts on critical areas, such as planning and bug resolution, which leads to quicker iterations and more efficient software development, ultimately reducing the time-to-value of a feature.

With new releases planned for these kinds of tools, and with ever-increasing capabilities, it’s worth keeping an eye on the ecosystem and trying out the new versions as they appear.

Meanwhile, we hope that when our AI overlords finally arrive, they will spare us in return for writing this article.

If you’re interested in learning more about our exploration of AI for development, see our previous articles in the ‘New Beings’ series.