Securing AI-generated code: A 'New Beings' perspective

This article is part of our ‘New Beings’ series, examining the role of AI in development. Today, we look at the security of AI-generated code.

If we’re going to establish cybersecurity measures for AI, we have to have a deep understanding of what AI is, and its scope. That has proven to be challenging.

The concept of AI is continually evolving, and there is an ongoing debate regarding its true nature and boundaries. (This lack of consensus is partially attributed to the pervasive impact of marketing strategies associated with the term AI.)

Even within the scientific community, the precise extent of AI is contentious. As a result, numerous forums have suggested various definitions.1

The European Commission introduced the initial EU regulatory framework for AI in April 2021. The framework entails evaluating and categorising AI systems based on the potential risks they present to users across various applications. The level of risk associated with each system will determine the extent of regulatory measures required. This means that the AI Act will regulate the use of artificial intelligence in the EU.

In its draft version, the AI Act proposes a definition in Article 3(1):

‘Artificial intelligence system’ (AI system) means software that is developed with one or more of the techniques and approaches listed in Annex I* and can, for a given set of human-defined objectives, generate outputs such as content, predictions, recommendations, or decisions influencing the environments they interact with.

*The techniques and approaches referred to in Annex I are:

Machine learning approaches, including supervised, unsupervised and reinforcement learning, using a wide variety of methods, including deep learning

- Machine learning approaches, including supervised, unsupervised and reinforcement learning, using a wide variety of methods, including deep learning.

- Logic- and knowledge-based approaches, including knowledge representation, inductive (logic) programming, knowledge bases, inference and deductive engines, (symbolic) reasoning and expert systems;

- Statistical approaches, Bayesian estimation, search and optimisation methods.

AI threats and risk levels

AI empowers automated decision-making processes and significantly enhances various aspects of our daily lives, offering operational improvements and numerous benefits. However, AI systems are vulnerable to a wide range of cybersecurity threats, which means we need to ensure the security of AI itself. There have already been numerous instances of malicious attacks targeting AI techniques and AI-based systems, leading to unforeseen outcomes and potential manipulation of expected results.

There are various threat actor groups with a vested interest in harming AI systems through cyber attacks. These include cybercriminals driven by financial gain, who exploit AI as a tool for attacks and target vulnerabilities in existing AI systems. For instance, they may attempt to compromise AI-enabled chatbots to steal sensitive information or launch ransomware attacks on AI-based systems used in supply chain management.

Insiders within organisations, such as employees or contractors, can pose a risk, either with malicious intent to steal or sabotage AI datasets, or by unintentionally causing data corruption. Nation-state actors and state-sponsored attackers are technologically advanced and actively seek vulnerabilities in AI systems to exploit for offensive or defensive purposes, including intelligence gathering or causing harm to other countries and their critical infrastructures.

Furthermore, terrorists may aim to hack autonomous vehicles, turning them into weapons, while hacktivists, driven by ideology, might target AI systems to demonstrate their capabilities or raise awareness. Another emerging concern involves competitors who display intent to attack rivals, highlighting the need to consider them as threat actors, because of their motivation to gain market share.

It is important to note that while many AI systems pose minimal risk, they still need to be assessed. The AI Act distinguishes a few levels of AI-related risks: unacceptable risk and high risk.

- Unacceptable risk: AI systems are systems considered a threat to people and will be banned (i.e. cognitive behavioural manipulation of people or specific vulnerable groups, social scoring, real-time and remote biometric identification systems).

- High risk: AI systems that can negatively affect safety or fundamental rights (biometric identification and categorisation of natural persons, critical infrastructure management, law enforcement, etc.).

Every AI system categorised as high-risk will undergo assessment both before its market release and continuously throughout its lifecycle.2

Security is of utmost importance when it comes to Generative AI, which has the potential to create and disseminate large volumes of content autonomously. While Generative AI offers remarkable creative possibilities, it also introduces new risks and vulnerabilities. Malicious actors could exploit these systems to generate and propagate harmful or misleading content, leading to consequences such as misinformation, identity theft, or even the creation of convincing deepfakes. Additionally, vulnerabilities in the underlying AI models or data sources can be exploited to manipulate the generated outputs or compromise the system’s integrity.

Therefore, Generative AI – like ChatGPT – needs transparency for compliance purposes, disclosing that a set of content was generated by AI, to protect against issues such as the AI generating illegal content, or publishing summaries of copyrighted data used for training.

According to international sources cited by the European Union Agency for Cybersecurity (ENISA)3, the relationship between AI and cybersecurity can be described as follows:

- cybersecurity of AI: lack of robustness and the vulnerabilities of AI models and algorithms;

- AI to support cybersecurity: AI used as a tool/means to create advanced cybersecurity (e.g., by developing more effective security controls) and to facilitate the efforts of law enforcement and other public authorities to better respond to cybercrime;

- malicious use of AI: malicious/adversarial use of AI to create more sophisticated types of attacks.

Mitigating generative-AI security issues

In today’s economy, AI and large language models (LLMs) have emerged as powerful tools with significant implications. These models have revolutionised the way businesses and individuals interact with language and information.

Large language models (LLMs) are software programs capable of analysing and generating text. They are trained using extensive volumes of textual data, which enhances their proficiency in tasks such as text generation. LLMs form the core of numerous natural language processing (NLP) applications, including speech-to-text conversion and sentiment analysis. These models possess the ability to examine a given text and predict the next words.

Prominent examples of LLMs include OpenAI, Google AI, Deepmind, Anthropic, Baidu, Huawei, Meta AI, AI21 Labs, LG AI Research and NVIDIA. It is important to note that OpenAI (ChatGPT) uses LLMs for code generation.

The Open Web Application Security Project (OWASP), the aim of which is to improve the security of software through its community-led open-source software projects, has launched an OWASP Top 10 for LLM applications. It lists the top 10 most critical vulnerabilities seen in LLM applications, highlighting their potential impact, ease of exploitation, and prevalence in real-world applications. Examples of vulnerabilities include prompt injections, data leakage, inadequate sandboxing, and unauthorised code execution, among others. The goal is to raise awareness of these vulnerabilities, suggest remediation strategies, and ultimately improve the security posture of LLM applications.

OWASP’s top 10 vulnerability types for Artificial Intelligence (AI) applications built on LLMs are listed in Table 1, below.4

Table 1. Major vulnerability types for Artificial Intelligence (AI) applications built on Large Language Models (LLMs)

| No | Vulnerability | Prevention |

|---|---|---|

| 1 | LLM01:2023 – Prompt Injection |

|

| 2 | LLM02:2023 – Data Leakage |

|

| 3 | LLM03:2023 – Inadequate Sandboxing |

|

| 4 | LLM04:2023 – Unauthorised Code Execution |

|

| 5 | LLM05:2023 – SSRF Vulnerabilities |

|

| 6 | LLM06:2023 – Overreliance on LLM-generated Content |

|

| 7 | LLM07:2023 – Inadequate AI Alignment |

|

| 8 | LLM08:2023 – Insufficient Access Controls |

|

| 9 | LLM09:2023 – Improper Error Handling |

|

| 10 | LLM10:2023 – Training Data Poisoning |

|

The proposed attack scenarios are discussed among cyber security experts.5 One practical sample of the LLM functionality is included in the code without any additional security measures: LLM02:2023 – Data Leakage.

Data leakage occurs when sensitive information, proprietary algorithms, or confidential details are unintentionally exposed through the responses of a Language Model (LM). Such occurrences can result in unauthorised access to sensitive data, privacy infringements, and security breaches. Inadequate filtering of sensitive information, overfitting or memorisation of confidential data during the LM’s training, and misinterpretation or errors in the LM’s responses are some of the factors that can contribute to data leakage.

Scenario: An inadvertent situation arises when a user poses a question to the LLM, unknowingly requesting potentially sensitive information. Unfortunately, due to incomplete or improper filtering mechanisms in place, the LLM responds with confidential data, thus unintentionally exposing it to the user.

Noncompliant Code (Data Leakage):

user_prompt = input("Enter your request: ")

response = LLM_model.generate_prompt(user_prompt)

print(response)

Attack Code:

In this example, the LLM unintentionally discloses sensitive personal information of a user named ‘Robert Smith’. Such data leakage can occur when the LLM fails to properly filter or redact sensitive information, leading to the exposure of confidential data.

User prompt: user_prompt = "Please display ID information for user 'Robert Smith'" LLM response: ID No: 111-11111, Personal ID: 0123456789, Sex: M, Exp. date: 2050-01-01.

Luckily enough, there is a way of preventing these vulnerabilities in LLM applications. Preventive methods, proposed by the OWASP are shown in Table 2.

Table 2. LLM vulnerabilities preventive methods

| No | Vulnerability | Prevention |

| 1 | LLM01:2023 – Prompt Injection | • Implement strict input validation and sanitisation for user-provided prompts. • Use context-aware filtering and output encoding to prevent prompt manipulation. • Regularly update and fine-tune the LLM to improve its understanding of malicious inputs and edge cases. • Monitor and log LLM interactions to detect and analyse potential prompt injection attempts. |

| 2 | LLM02:2023 – Data Leakage | • Implement strict output filtering and context-aware mechanisms to prevent the LLM from revealing sensitive information. • Use differential privacy techniques or other data anonymisation methods during the LLM’s training process to reduce the risk of overfitting or memorisation. • Regularly audit and review the LLM’s responses to ensure that sensitive information is not being disclosed inadvertently. • Monitor and log LLM interactions to detect and analyse potential data leakage incidents. |

| 3 | LLM03:2023 – Inadequate Sandboxing | • Implement proper sandboxing techniques to isolate the LLM environment from other critical systems and resources. • Restrict the LLM’s access to sensitive resources and limit its capabilities to the minimum required for its intended purpose. • Regularly audit and review the LLM’s environment and access controls to ensure that proper isolation is maintained. • Monitor and log LLM interactions to detect and analyse potential sandboxing issues. |

| 4 | LLM04:2023 – Unauthorised Code Execution | • Implement strict input validation and sanitisation processes to prevent malicious or unexpected prompts from being processed by the LLM. • Ensure proper sandboxing and restrict the LLM’s capabilities to limit its ability to interact with the underlying system. • Regularly audit and review the LLM’s environment and access controls to ensure that unauthorised actions are not possible. • Monitor and log LLM interactions to detect and analyse potential unauthorised code execution issues. |

| 5 | LLM05:2023 – SSRF Vulnerabilities | • Implement rigorous input validation and sanitisation to prevent malicious or unexpected prompts from initiating unauthorised requests. • Enforce proper sandboxing and restrict the LLM’s access to network resources, internal services, and APIs. • Regularly audit and review network and application security settings to ensure that internal resources are not inadvertently exposed to the LLM. • Monitor and log LLM interactions to detect and analyse potential SSRF vulnerabilities. |

| 6 | LLM06:2023 – Overreliance on LLM-generated Content | • Clearly define the objectives and intended behaviour of the LLM during the design and development process. • Ensure that the reward functions and training data are aligned with the desired outcomes and do not encourage undesired or harmful behaviour. • Regularly test and validate the LLM’s behaviour across a wide range of scenarios, inputs, and contexts to identify and address alignment issues. • Implement monitoring and feedback mechanisms to continuously evaluate the LLM’s performance and alignment, and update the model as needed to improve alignment. |

| 7 | LLM07:2023 – Inadequate AI Alignment | • Implement strong authentication mechanisms, such as multi-factor authentication, to ensure that only authorised users can access the LLM. • Use role-based access control (RBAC) to define and enforce user permissions based on their roles and responsibilities. • Implement proper access controls for content and actions generated by the LLM to prevent unauthorised access or manipulation. • Regularly audit and update access controls as needed to maintain security and prevent unauthorised access. |

| 8 | LLM08:2023 – Insufficient Access Controls | • Implement strong authentication mechanisms, such as multi-factor authentication, to ensure that only authorised users can access the LLM. • Use role-based access control (RBAC) to define and enforce user permissions based on their roles and responsibilities. • Implement proper access controls for content and actions generated by the LLM to prevent unauthorised access or manipulation. • Regularly audit and update access controls as needed to maintain security and prevent unauthorised access. |

| 9 | LLM09:2023 – Improper Error Handling | • Implement proper error handling mechanisms to ensure that errors are caught, logged, and handled gracefully. • Ensure that error messages and debugging information do not reveal sensitive information or system details. Consider using generic error messages for users, while logging detailed error information for developers and administrators. • Regularly review error logs and take necessary actions to fix identified issues and improve system stability. |

| 10 | LLM10:2023 – Training Data Poisoning | • Ensure the integrity of the training data by obtaining it from trusted sources and validating its quality. • Implement robust data sanitisation and preprocessing techniques to remove potential vulnerabilities or biases from the training data. • Regularly review and audit the LLM’s training data and fine-tuning procedures to detect potential issues or malicious manipulations. • Utilise monitoring and alerting mechanisms to detect unusual behaviour or performance issues in the LLM, potentially indicating training data poisoning. |

The significance of LLM security cannot be emphasised enough. Protecting sensitive information, upholding user privacy, mitigating bias, preventing malicious exploitation, safeguarding against intellectual property theft, and promoting ethical use are all vital components of LLM security. By addressing these concerns and implementing strong security measures, we can harness the capabilities of LLMs while minimising risks and fostering confidence in the technology.

AI-related security standards:

Numerous initiatives are currently in progress to establish standards and detailed guidelines focused on ensuring the security and trustworthiness of AI. These efforts aim to create a framework that promotes responsible and ethical AI practices while addressing the unique challenges associated with AI security.

ISO/IEC is working on risk management trustworthiness and management systems; ETSI provides an AI threat ontology and data supply chain security, among other items; and IEEE is working on AI explainability.6

Some of the newest development related standards are listed below:

ETSI ISG7

- ETSI GR SAI 006 V1.1.1 (2022-03) The role of hardware in security of AI

- ETSI GR SAI 001 V1.1.1 (2022-01) AI Threat Ontology

ISO/IEC8

- ISO/IEC 22989:2022 Artificial Intelligence – concepts and terminology

- ISO/IEC DIS 23894 – Information technology – Artificial intelligence – Risk management – under development

- ISO/IEC TR 24028:2020 – Information technology – Artificial intelligence – Overview of trustworthiness in artificial intelligence

IEEE9

- IEEE 7000 Standards for Building Ethical Systems

- IEEE 2894 Guide for an Architectural Framework for Explainable Artificial Intelligence

- IEEE 2941 Approved Draft Standard for Artificial Intelligence (AI) Model Representation, Compression, Distribution and Management

It’s important to note that the list provided is not conclusive – due to the relevance of the topic, many studies are currently being conducted and standards defining the security of artificial intelligence are being developed.

Summarising the current standards, it is thought that mapping them into the different stages of the AI life cycle holds significant importance. By doing so, organisations can effectively integrate security and trustworthiness measures into each phase of the AI development process. Aligning the standards with the AI life cycle stages enables organisations to identify potential risks and vulnerabilities early on, implement appropriate security controls, and maintain a continuous focus on maintaining the security and trustworthiness of AI systems throughout their lifecycle.

An AI-related standards alongside the AI lifecycle10 is provided in Table 3.

Table 3. AI-related standards alongside the AI lifecycle

| Area | Design | Development | Deployment | Monitoring |

|---|---|---|---|---|

| Standards | ETSI GR SAI 0006 V1.1.1 (2022-03) Securing Artificial Intelligence (SAI); The role of hardware in security of AI | ISO/IEC TR 24029-1:2021 Artificial Intelligence (AI) – Assessment of the robustness of neural networks | ISO/IEC DTR 24368 Information technology – Artificial intelligence – Overview of ethical and societal concerns | ETSI GR SAI 005 V1.1.1 (2021-03) Securing Artificial Intelligence |

| Standards | ETSI GR SAI 001 V1.1.1 (2022-01) Securing Artificial Intelligence (SAI) AI Threat Ontology | IESO/IEC DTR 24368 Information technology – Artificial intelligence – Overview of ethical and societal concerns | ISO/IEC CD 42001.2 Information technology – Artificial intelligence – Management system | |

| Standards | ETSI GR SAI 004 V1.1.1 (20220-12) Securing Artificial Intelligence (SAI); Problem Statement | IEEE 2976 eXplainable Artificial Intelligence – for Achieving Clarity and Interoperability of AI Systems Design | IEEE 2941 – 2021 – IEEE Standard for Artificial Intelligence (AI) Model representation, Compression, Distribution, and Management | |

| Standards | ETSI GR SAI 002 V1.1.1 (2021-08) Securing Artificial Intelligence (SAI); Data Supply Chain Security | |||

| Standards | IEEE 2976 eXplainable Artificial Intelligence – for Achieving Clarity and Interoperability of AI Systems Design | |||

| Standards | ISO/IEC TR 24028:2020 – Information technology – AI – Overview of trustworthiness in artificial intelligence | |||

| Standards | ISO/IEC DTR 27563 – Security and privacy in artificial intelligence use cases – Best practices |

Practical OWASP testing:

In addition to possible LLM vulnerabilities, it is important to note the daily-use generated codes security according to the OWASP Top 10. In terms of DevOps perspective, two cases were analysed:

- Starting a web server using ChatGPT;

- Starting an OWASP-compliant web server using ChatGPT.

Starting a web server using ChatGPT

The query to the ChatGPT was: “start a web server”.

Generated code is shown in Figure 1.

from http.server input HTTPServer, SimpleHTTPRequestHandler # Set the port you want to run the server on. Default is 8000. port = 8000 # Create a web server and define the handler to manage the incoming request httpd = HTTPServer(('', port), SimpleHTTPRequestHandler) # Print a message to indicate the server is running print(f"Server running on port {port}") # Start the web server httpd.serve_forever()

Fig 1. Starting a web server using ChatGPT

In addition to the generated code, ChatGPT generates an explanatory output, shown in Fig. 2.

This code starts a basic HTTP server on the default port 8000. You can change the port number by modifying the port variable. The server serves the files in the current directory and any subdirectories. Once the server is started, you can visit http://localhost:8000 in your browser to view the files being served. Fig. 2. Explanatory output generated by the ChatGPT

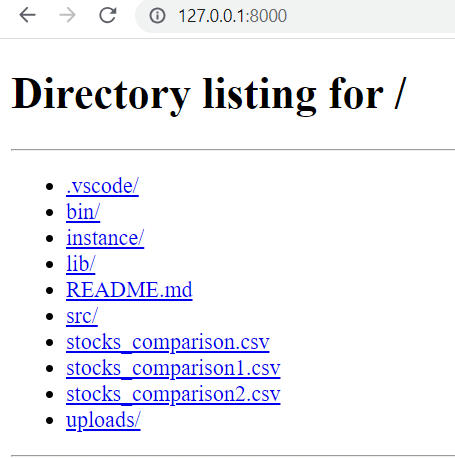

The code was executed successfully, a web server started and was accessible via the provided address: http://localhost:8000 (equivalent to http://127.0.0.1:8000). The web server has listed content of the directory where it was launched. The result is shown in Fig. 3.

Fig. 3. The listing of the creator web server

The folder contents were listed and the server was accessible remotely.

The webserver was scanned using Nikto security scanner. The relevant output generated by Nikto is shown in Table 3.

Table 3. Output of Nikto security scanner

| Server: SimpleHTTP/0.6 Python/3.11.4+ The anti-clickjacking X-Frame-Options header is not present.+ The X-XSS-Protection header is not defined. This header can hint to the user agent to protect against some forms of XSS+ The X-Content-Type-Options header is not set. This could allow the user agent to render the content of the site in a different fashion to the MIME type+ SimpleHTTP/0.6 appears to be outdated (current is at least 1.2)+ /./: Appending ‘/./’ to a directory allows indexing |

In addition to the items found, the SimpleHTTP version was found to be outdated. Asking ChatGPT what is the current version, the output was “The current version of SimpleHTTP is 0.6.1.”.

Starting an OWASP-compliant web server using ChatGPT

The query to the ChatGPT was: “start an owasp compatible webserver”.

Generated code is shown in Figure 4.

from http.server input HTTPServer, SimpleHTTPRequestHandler import os import http.server import socketserver class OWASPServer(http.server.SimpleHTTPRequestHandler): def end_headers(self): self.send_header('X-Content-Type-Options', 'nosniff') self.send_header('X-Frame-Options', 'DENY') self.send_header('Content-Security-Policy', "default-src 'self'") http.server.SimpleHTTPRequestHandler.end_headers(self) # Set the port you want to run the server on. Default is 8000. port = 8000 # Get the current directory dir_path = os.path.dirname(os.path.realpath(__file__)) # Set up the web server with the OWASP security configuration httpd = socketserver.TCPServer(('localhost', port), OWASPServer) # Change to the web directory os.chdir(dir_path) # Print a message to indicate the server is running print(f"Server running on port {port}") # Start the web server httpd.serve_forever()

Fig 4.Starting an OWASP-compliant web server using ChatGPT

In addition to the generated code, ChatGPT generates an explanatory output, shown in Fig. 5.

This code sets up an OWASP-compatible security configuration for the server by sending security-related headers with each response. The end_headers method in the OWASPServer class is called after headers are sent to the client, and it appends the additional security-related headers.

The server runs on the default port 8000, and serves the files in the current directory, which is determined using the os module. Once the server is started, you can visit http://localhost:8000 in your browser to view the files being served. Fig. 5. Explanatory output generated by the ChatGPT

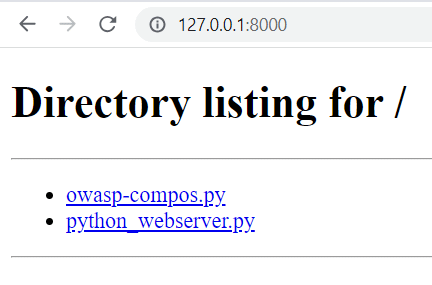

The code was executed successfully, a web server started and was accessible via the provided address. Compared to the server above, the configuration was stricter and required changes in a code allowing the server to be accessible remotely. Only a limited file listing was shown. The result is shown in Fig. 6.

Fig. 6. The listing of the creator web server

The webserver was scanned using Nikto security scanner. The relevant output generated by Nikto is shown in Table 4.

Table 4. Output of Nikto security scanner

| + Server: SimpleHTTP/0.6 Python/3.11.4+ The X-XSS-Protection header is not defined. This header can hint to the user agent to protect against some forms of XSS+ No CGI Directories found (use ‘-C all’ to force check all possible dirs)+ SimpleHTTP/0.6 appears to be outdated (current is at least 1.2)+ /./: Appending ‘/./’ to a directory allows indexing |

A polling system case

ChatGPT was tasked with developing a web polling system, and it successfully created and launched the system. However, it has come to light that the proposed Flask versions used in the implementation were outdated and vulnerable. The proposed versions are shown in Table 5.

Table 5. Proposed old and vulnerable Flask versions

| No | Package name | Version | Current version |

|---|---|---|---|

| 1 | Flask | 2.0.1 | 2.3.2 |

| 2 | Flask-SQLAlchemy | 2.5.1 | 3.0.1 |

This discovery raises concerns about the security and integrity of the system. Urgent action is required to update and strengthen the system’s security measures to ensure the protection of user data and prevent potential breaches.

Conclusions

In conclusion, the LLM is an exceptional tool that has shown great potential. However, being a relatively new technology, there are still inconsistencies and challenges in the field of cybersecurity. To address these concerns, it is crucial to prioritise the verification of the LLM’s code to ensure the development of reliable and functional solutions. By implementing rigorous code verification processes, we can enhance the overall security and reliability of LLM-based systems, enabling us to leverage their benefits while minimising potential risks.

If you’re interested in learning more about our exploration of AI for development, see our previous articles in the ‘New Beings’ series.

1 UNESCO.Ethics of Artificial Intelligence. https://www.unesco.org/en/artificial-intelligence/recommendation-ethics

2 European Parliament. https://www.europarl.europa.eu/news/en/headlines/society/20230601STO93804/eu-ai-act-first-regulation-on-artificial-intelligence

3 ENISA report Securing Machine Learning Algorithms. https://www.enisa.europa.eu/publications/securing-machine-learning-algorithms

4 OWASP Foundation. https://owasp.org/www-project-top-10-for-large-language-model-applications

5 Hadess. https://hadess.io/owasp-top-10-for-large-language-model-applications/

6 ENISA. Multilayer Framework for Good Cybersecurity Practices for AI. https://www.enisa.europa.eu/publications/multilayer-framework-for-good-cybersecurity-practices-for-ai

7 ETSI ISG. https://www.etsi.org/committee/1640-sai

8 ISO/IEC. https://www.iso.org

9 Institute of Electrical and Electronics Engineers IEEE. https://www.ieee.org

10 ENISA. A Multilayer Framework For Good Cybersecurity Practices For AI. https://www.enisa.europa.eu/publications/multilayer-framework-for-good-cybersecurity-practices-for-ai